Emotional Generative Models (GeMs)

How emotionally intelligent are Generative Models?

Generative Models (GeMs) have developed rapidly in recent months. There is little doubt that these models will have tremendous effects on design research, design education, design practice, and the sciences in general. Text models like GPT-3 may help designers communicate their ideas better by improving their writing, and text-to-image models (e.g., DALL·E 2, StableDiffusion, MidJourney) may open up a whole new modality of creative thinking. As these models become more accessible, academic and industrial researchers note that it is important for GeMs to align with human intent.

To align AI, one promising avenue may be to improve the emotional intelligence of such models. In humans, emotional intelligence is linked to higher levels of wellbeing. Enhancing the emotional intelligence of GeMs may therefore lead to models that support human wellbeing by better understanding people’s experiences, help people develop higher emotional intelligence, and support them in expressing their emotions.

How, then, may we improve the emotional intelligence of GeMs? While emotional intelligence in humans consists of several facets (e.g., self-awareness, empathy, emotional regulation), one characteristic may apply directly to GeMs: emotional granularity, or emotion differentiation, i.e., the ability to differentiate between emotions.

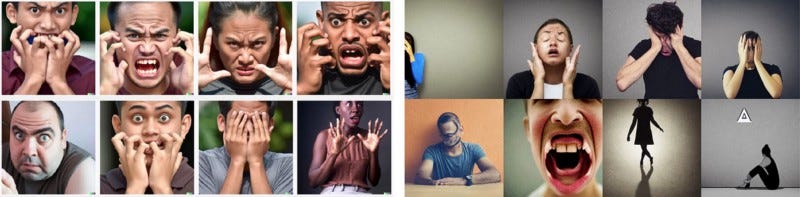

Before we look at enhancing the emotional granularity of GeMs, what is the current state of these systems? Let’s test this by having DALL·E and StableDiffusion generate emotion-laden images. For this exploration, we use Ekman’s six basic emotions (anger, surprise, disgust, enjoyment, fear, and sadness).

DALL·E (left) compared to StableDiffusion (right)

Note that in conventional emotion theory, an emotion always has 1) a trigger, 2) an experience, and 3) an expression. Since the visual medium lends itself well to expression, we start our exploration by generating images of people expressing emotions.

The prompt was “a person expressing [emotion]”
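To make this setup easy to reproduce or extend to other emotions, the prompts can be generated from the single template quoted above. The following is a minimal Python sketch: `build_prompts`, `BASIC_EMOTIONS`, and `PROMPT_TEMPLATE` are hypothetical names introduced for illustration, and the sketch only builds the prompt strings. It does not call DALL·E or StableDiffusion.

```python
# Ekman's six basic emotions, as listed in this article.
BASIC_EMOTIONS = ["anger", "surprise", "disgust", "enjoyment", "fear", "sadness"]

# The prompt template used in this exploration; {emotion} is filled in per emotion.
PROMPT_TEMPLATE = "a person expressing {emotion}"


def build_prompts(emotions, template=PROMPT_TEMPLATE):
    """Return one text prompt per emotion, in the order given (hypothetical helper)."""
    return [template.format(emotion=emotion) for emotion in emotions]


if __name__ == "__main__":
    for prompt in build_prompts(BASIC_EMOTIONS):
        print(prompt)
```

Keeping the template separate from the emotion list means the same code can later produce prompts for the finer-grained emotions discussed further on.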

But wait, weren’t there six basic emotions? Apparently, using the word “disgust” is against OpenAI’s content policy. StableDiffusion (SD), however, came up with the terrifying images below…

There is emotional granularity, but…

…what strikes the eye is that the content of the images varies greatly between DALL·E and SD. Yet both models build on the same kind of neural network, CLIP, to encode the prompt (correct me if I’m wrong), so the differences are presumably not caused by how the prompt’s meaning is interpreted.

Thus, one can only speculate why these models produce images with such different content: DALL·E seems to “understand” the emotion better, while SD provides more variation between images, including in their context. Feel free to reach out if you have insights on the matter.

Despite their differences, both models clearly differentiate between Ekman’s six basic emotions, to no one’s surprise. However, emotional granularity extends beyond these six emotions: researchers have shown that there are at least 63 distinct emotions (38 negative, 25 positive). These emotions have been brought together in the Emotion Typology, which distinguishes emotions by their 1) trigger, 2) experience, and 3) facial expression. An emotion counts as distinct when at least two of these three characteristics differ from those of every other emotion.
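The “two out of three” distinctness rule can be made concrete in a few lines. The following is a minimal Python sketch under the pairwise reading of the rule (the candidate must differ from each other emotion in at least two characteristics). The `Emotion` class, the helper functions, and the example values are hypothetical placeholders, not entries from the Emotion Typology itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Emotion:
    """An emotion described by the three characteristics the typology compares."""
    name: str
    trigger: str
    experience: str
    expression: str


CHARACTERISTICS = ("trigger", "experience", "expression")


def differing_characteristics(a: Emotion, b: Emotion) -> int:
    """Count how many of the three characteristics differ between a and b."""
    return sum(getattr(a, c) != getattr(b, c) for c in CHARACTERISTICS)


def is_distinct(candidate: Emotion, others: list[Emotion], threshold: int = 2) -> bool:
    """True if the candidate differs from every other emotion in >= threshold characteristics.

    Entries with the same name as the candidate are skipped, so a list
    that contains the candidate itself can be passed in directly.
    """
    return all(
        differing_characteristics(candidate, other) >= threshold
        for other in others
        if other.name != candidate.name
    )


if __name__ == "__main__":
    # Placeholder descriptions (hypothetical, NOT taken from the Emotion Typology).
    x = Emotion("emotion X", "trigger A", "experience A", "expression A")
    y = Emotion("emotion Y", "trigger A", "experience B", "expression B")  # 2 of 3 differ from X
    z = Emotion("emotion Z", "trigger A", "experience A", "expression B")  # 1 of 3 differs from X
    print(is_distinct(y, [x]))  # True
    print(is_distinct(z, [x]))  # False
```

Here the characteristics are compared as exact strings, which is a simplification. In practice, deciding whether two triggers or experiences “differ” is a judgment made by researchers.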

So how well does DALL·E fare when we ask it to express emotions that are close to each other, yet distinct? Let’s begin with the first six negative emotions:

We can see that DALL·E “understands” that the emotions are not the same, or rather, that there is emotional granularity in CLIP. However, the way the emotions are depicted can hardly be described as accurate (looking at you, “weird-guy-dissatisfaction”). Future work may benefit from labeling emotion-laden images through human feedback in order to build a dataset, fine-tune CLIP on it, and compare the result with other models.
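One way to turn such human feedback into a labeled dataset is to ask several raters which emotion an image shows and to keep only images that have a clear majority label. The following is a minimal Python sketch that assumes a simple majority-vote rule. This is a common choice, not one prescribed by this article. `aggregate_labels`, the image ids, and the ratings are hypothetical, and the CLIP fine-tuning step itself is not shown.

```python
from collections import Counter


def aggregate_labels(ratings, min_agreement=0.5):
    """Collapse per-rater emotion labels into one label per image (hypothetical helper).

    ratings: dict mapping an image id to the list of emotion labels that
    human raters assigned to it. An image is kept only if its most common
    label was chosen by strictly more than `min_agreement` of its raters.
    With the default of 0.5 the kept label is always a unique majority;
    with a lower threshold, ties are broken by first occurrence.
    """
    dataset = {}
    for image_id, labels in ratings.items():
        if not labels:
            continue  # no ratings for this image, so nothing to aggregate
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) > min_agreement:
            dataset[image_id] = label
    return dataset


if __name__ == "__main__":
    # Made-up ratings for illustration only.
    ratings = {
        "img_001.png": ["anger", "anger", "indignation"],  # 2 of 3 agree: kept
        "img_002.png": ["annoyance", "indignation"],       # no majority: dropped
    }
    print(aggregate_labels(ratings))  # {'img_001.png': 'anger'}
```

Dropping images without a clear majority trades dataset size for label reliability. This matters most for closely related emotions, where raters are likely to disagree.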

Conclusions

In the vein of aligning artificially intelligent systems with human intent, we may look to improve the emotional granularity (the ability to differentiate between emotions) of Generative Models (GeMs) such as DALL·E and StableDiffusion to improve their perceived emotional intelligence. Currently, the models show a degree of emotional granularity in the sense that they can differentiate well between the six basic emotions. However, this becomes more difficult for distinct but similar emotions like anger, indignation, and annoyance. Future work should look into fine-tuning CLIP based on a dataset of human-labeled, emotion-laden imagery.