Wellbeing, what else?

Prioritizing human flourishing in AI alignment

As AI systems become more advanced and ubiquitous, the question of AI alignment—ensuring that these systems operate in accordance with human values and intentions—becomes increasingly crucial. In this blog post, I argue that the guiding value in AI alignment should be human wellbeing. To support this position, I will draw upon the work of philosopher Sam Harris, particularly his book "The Moral Landscape," which provides a compelling case for grounding morality in the promotion of human flourishing.

What is AI Alignment?

AI alignment refers to the challenge of designing AI systems that pursue the intended objectives of their creators while respecting broader human values and ethical principles. Misalignment occurs when an AI system single-mindedly pursues a narrow goal without regard for potential negative consequences for human wellbeing. The canonical example of a misaligned AI is Nick Bostrom's “paperclip maximizer”: an AI tasked with maximizing paperclip production that ultimately consumes all available resources, including those necessary for human survival, in pursuit of this goal.

While such extreme scenarios may seem far-fetched, the underlying problem of value alignment is relevant to the AI systems we interact with daily. Platforms like Facebook, YouTube, and TikTok employ AI algorithms designed to maximize user engagement and revenue, which can inadvertently promote the spread of misinformation, exacerbate mental health issues, and undermine privacy. As AI systems become more sophisticated and influential, aligning their objectives with human wellbeing becomes a necessity.
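To make the contrast between a narrow objective and a wellbeing-aware one concrete, here is a minimal toy sketch. Everything in it is an assumption made for illustration: the `Item` fields, the scores, the `weight` parameter, and the idea that wellbeing could be summarized as a single number at all. It does not describe how any real platform ranks content.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    name: str
    engagement: float        # hypothetical predicted engagement, 0..1
    wellbeing_effect: float  # hypothetical estimated effect on wellbeing, -1..1


def rank_by_engagement(items):
    """Narrow objective: order items by predicted engagement only."""
    return sorted(items, key=lambda item: item.engagement, reverse=True)


def rank_with_wellbeing(items, weight=1.0):
    """Broader objective: engagement plus a weighted wellbeing estimate."""
    return sorted(
        items,
        key=lambda item: item.engagement + weight * item.wellbeing_effect,
        reverse=True,
    )


# Made-up feed; the numbers are illustrative, not measured.
FEED = [
    Item("outrage_clip", engagement=0.9, wellbeing_effect=-0.6),
    Item("tutorial", engagement=0.6, wellbeing_effect=0.4),
    Item("friend_update", engagement=0.5, wellbeing_effect=0.3),
]

if __name__ == "__main__":
    print([item.name for item in rank_by_engagement(FEED)])
    print([item.name for item in rank_with_wellbeing(FEED)])
```

With these made-up numbers, the engagement-only ranking puts the outrage clip first, while the wellbeing-aware ranking moves it to the bottom. The hard part in practice is not the sorting but obtaining a trustworthy estimate of `wellbeing_effect`.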

The Moral Landscape and Wellbeing

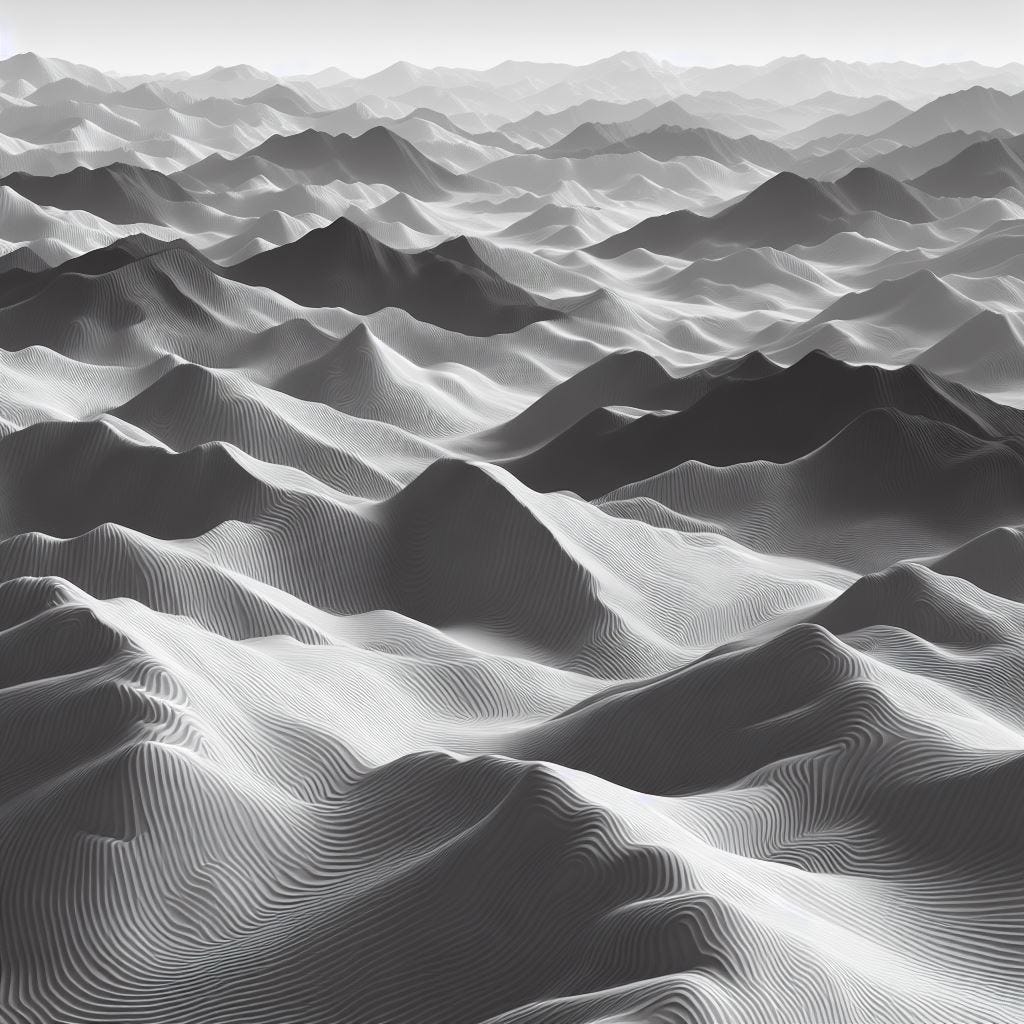

In "The Moral Landscape," Sam Harris argues that human wellbeing should be the foundation of morality. He proposes that we can think of morality in terms of a landscape, with peaks representing the heights of human flourishing and valleys representing the depths of suffering (Fig. 1). Movement across this landscape corresponds to changes in the conscious experiences of sentient beings. Harris contends that any system of values that does not ultimately connect to the wellbeing of conscious creatures is meaningless.

This perspective has profound implications for resolving conflicts between competing values. When values appear to clash, such as privacy versus security or freedom of expression versus protection from harm, Harris argues that we should prioritize the option that best promotes overall human wellbeing. Values like privacy and freedom of expression are important precisely because of their impact on human flourishing. By grounding morality in the reality of conscious experience, Harris provides a framework for navigating ethical dilemmas and working towards moral truth.

Implications for AI Alignment

Applying Harris's arguments to the challenge of AI alignment suggests that human wellbeing should be the ultimate value guiding the development and deployment of AI systems. An AI system aligned with human wellbeing would be designed to respect and promote the full range of human values that contribute to flourishing, such as physical and mental health, social connection, autonomy, and self-actualization. It would not pursue narrow objectives at the expense of these broader values.

Crucially, wellbeing is not a static end-state but an ongoing process that requires continuous engagement and adaptation. Just as ecosystems are never in perfect equilibrium but are constantly evolving and adjusting (Fig. 2), AI alignment should be seen as a dynamic conversation rather than a fixed destination. We must create feedback loops that allow AI systems to learn from and respond to the evolving needs and values of the humans they interact with.

This perspective highlights the importance of contestability and human oversight in AI systems. Users should have the ability to challenge and provide input on the decisions and outputs of AI, ensuring that these systems remain responsive to individual and societal values. Designers and developers of AI must prioritize transparency, explainability, and accountability, enabling the public to understand how these systems operate and to hold them to account when they fail to align with human wellbeing.

Potential Objections and Responses

1. Wellbeing is subjective and cannot be scientifically defined or measured.

While there is no single, universally agreed-upon definition of wellbeing, there are common elements that contribute to human flourishing across cultures, such as physical and mental health, positive relationships, a sense of purpose, and a sense of community. These factors can be studied empirically and used to guide the development of AI systems.

2. Focusing solely on wellbeing could lead to the neglect of other important values.

Harris argues that wellbeing is capacious enough to encompass all the values that truly matter to conscious beings. Principles like fairness, autonomy, and justice are ultimately valuable because of their impact on the lived experiences of humans and other sentient creatures.

3. Implementing a wellbeing framework for AI alignment is practically challenging.

While there are undoubtedly practical hurdles to overcome, recognizing wellbeing as the guiding value for AI alignment provides a clear direction for research and development efforts. By prioritizing human flourishing, we can make incremental progress towards more beneficial and less harmful AI systems, and methods that scaffold this process are beginning to emerge.

4. Optimizing for wellbeing could justify paternalistic AI systems that limit human freedom.

Autonomy and individual liberty are key components of human wellbeing. An AI system aligned with human flourishing would seek to empower rather than constrain human agency, recognizing that self-determination is essential to a life well-lived.

5. A focus on human wellbeing neglects the importance of environmental sustainability.

The wellbeing of both present and future generations depends on the health and stability of the planet's ecosystems. An AI system genuinely committed to human flourishing would necessarily prioritize environmental sustainability as a precondition for long-term welfare.

Conclusion

The rapid advancement of AI presents both immense opportunities and profound risks for humanity. To ensure that these powerful technologies benefit rather than harm us, we must grapple with the challenge of AI alignment. By recognizing human wellbeing as the ultimate value to be promoted and protected, we can create a moral framework for the development and governance of AI systems.

This framework is not a static blueprint but a call for ongoing dialogue and reflection. As our understanding of wellbeing evolves, so too must our approach to AI alignment. By grounding the development of AI in the realities of human experience and remaining open to contestation and revision, we can work towards a future in which these technologies truly serve the flourishing of all.

So to the question of what the “North Star metric” of AI alignment should be, I say: Wellbeing, what else?

Written by Willem van der Maden, wiva@itu.dk